Parents can rest assured that the Online Safety Act will hold social media companies accountable not only for illegal content on their platforms, but also for any material likely to cause “serious trauma” to children.

In an open letter, Culture Secretary Michelle Donelan sought to allay concerns that the long-delayed legislation was being watered down after it finally returned to Parliament.

The proposed law, which aims to regulate online content to help keep users, especially children, safe and to hold companies accountable for material, was amended amid concerns about its impact on free speech.

It was tweaked to remove the duty on social media sites to take down “legal but harmful” material, which had been criticized by free speech campaigners.

Instead, social media platforms will provide tools to hide certain content — including content that doesn’t meet the criminal threshold but could be harmful.

This includes eating disorder content, which a Sky News investigation found being recommended via TikTok’s suggested search feature despite no search for explicitly harmful content.

Writing to parents, carers and guardians, Ms Donelan said: “We have seen so many innocent childhoods ruined by this kind of content and I am determined to put these important protections for your children and loved ones into law as quickly as possible.”

What’s in the Online Safety Act?

The letter outlines six measures the bill would take to crack down on social media platforms:

• Remove illegal content, including child sexual abuse and terrorism

• Protect children from harmful and inappropriate content such as cyberbullying or promotion of eating disorders

• Impose a legal obligation on companies to enforce their own age limits, which for most companies is 13

• Get companies to use age checks to protect children from inappropriate content

• Posts that encourage self-harm will be made illegal

• Companies will be required to publish risk assessments of potential dangers to children on their websites

Ms Donelan said companies could face fines of up to £1bn if they were found to be falling short, and could see their websites blocked in the UK.

The updated legislation comes as platforms push back against a similar online child safety law in the U.S. state of California, which requires verification of users’ ages.

NetChoice, an industry group whose members include Meta and TikTok, is filing a lawsuit against the law, saying it seeks to have “online service providers act as roving Internet censors at the behest of the state.”

What are critics saying about the Online Safety Act?

Ms Donelan’s letter follows criticism of the amendments, including from the father of Molly Russell, who a coroner ruled died from self-harm while suffering “negative effects from online content”.

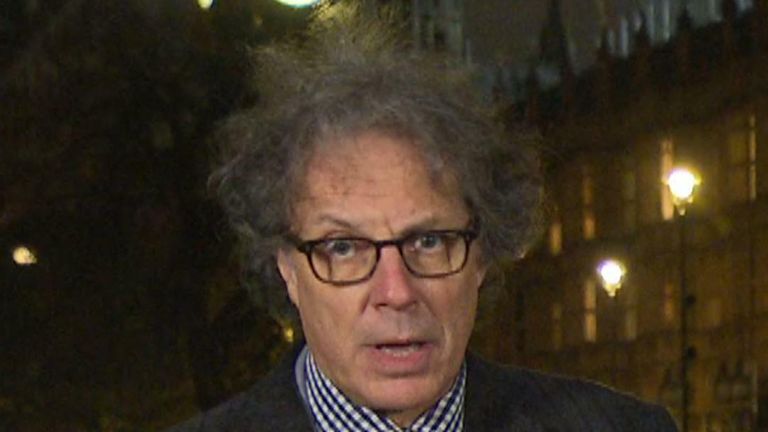

“I’m concerned – removing a whole clause is hard to see as anything other than a watering down,” Ian Russell told Sky News.

“The secretary of state promised that the bill would be strengthened in terms of provisions to keep children safe, but the bill has changed in other ways.

“I wonder what would happen if the kids figured out a way around the dam and into the adult area.”

Anyone feeling emotionally distressed or suicidal can call Samaritans for help on 116 123 or email jo@samaritans.org. Alternatively, letters can be mailed to: Freepost SAMARITANS LETTERS.